“High-risk AI systems are those that could significantly impact people’s safety, rights, or livelihoods—and they face the strictest EU AI Act requirements.” – Julie Gabriel, CEO and co-founder, legal lead, eyreACT

An AI system is high-risk under the EU AI Act if its intended purpose matches one of the use cases listed in Annex III, in areas such as employment, education, and public services, or if it is a safety component of a regulated product such as a medical device. Misclassification means taking on the wrong obligations, or none at all. Here’s your definitive 2025 guide to identifying high-risk systems correctly.

What Qualifies as High-Risk Under EU AI Act?

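Definition: Under Article 6 of the EU AI Act, an AI system is high-risk in two cases: it is a safety component of a product (or is itself a product) covered by the EU harmonisation legislation listed in Annex I and must undergo third-party conformity assessment, or it is intended for one of the uses listed in Annex III, in areas where it could adversely affect health, safety, or fundamental rights.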

The 4 Key Sectors to Watch

- Healthcare & Life Sciences – Medical devices such as diagnostic and patient-monitoring software (high-risk through the Annex I product route), emergency patient triage

- Employment & HR – Recruitment, performance evaluation, promotion decisions

- Public Services & Benefits – Social welfare, immigration, law enforcement assistance

- Critical Infrastructure – Transportation, energy, water supply management

High-risk classification triggers conformity assessments, CE marking, and ongoing monitoring requirements under the EU AI Act.

The Updated 2025 Checklist: 10 Points to Assess Your System

Use this checklist to determine if your AI system qualifies as high-risk:

- Healthcare impact: Does it diagnose, treat, or make medical recommendations?

- Example: AI radiology software, surgical robots, medication dosing systems

- Employment decisions: Does it screen, evaluate, or influence hiring/firing?

- Example: Resume screening tools, performance review algorithms, promotion systems

- Educational assessment: Does it evaluate student performance or access to education?

- Example: Automated essay grading, university admission algorithms

- Essential services access: Does it determine eligibility for public benefits or services?

- Example: Welfare eligibility systems, housing allocation algorithms

- Law enforcement assistance: Does it support police work or criminal justice decisions?

- Example: Predictive policing software, risk assessment tools for sentencing (note that predicting an individual’s risk of offending based solely on profiling is prohibited outright under Article 5)

- Border and migration control: Does it process visa, asylum, or immigration applications?

- Example: Automated visa processing, border security screening systems

- Democratic process involvement: Does it influence voting or democratic participation?

- Example: Political ad targeting systems, voter verification tools

- Critical infrastructure management: Does it act as a safety component in managing essential utilities, digital infrastructure, or transportation?

- Example: Smart grid management, autonomous vehicle systems, air traffic control

- Credit and insurance decisions: Does it assess creditworthiness, or assess risk and set prices for life or health insurance?

- Example: Loan approval algorithms, life and health insurance premium calculation systems

- Biometric identification: Does it identify or categorize people based on biological traits?

- Example: Facial recognition systems, emotion detection software
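The ten questions above work as a first screening pass: a single “yes” flags the system for a full Article 6 analysis rather than settling its classification on its own. A minimal Python sketch of that pass, assuming each answer is recorded as a yes/no flag; `CHECKLIST_AREAS` and `screen_system` are hypothetical names chosen for illustration, not part of eyreACT or any official tool:

```python
from typing import Dict, List, Tuple

# Hypothetical short keys for the ten checklist questions, in checklist order.
CHECKLIST_AREAS: Tuple[str, ...] = (
    "healthcare",               # diagnoses, treats, or makes medical recommendations
    "employment",               # screens, evaluates, or influences hiring/firing
    "education",                # evaluates students or access to education
    "essential_services",       # determines eligibility for benefits or services
    "law_enforcement",          # supports police or criminal justice decisions
    "migration",                # processes visa, asylum, or immigration applications
    "democratic_process",       # influences voting or democratic participation
    "critical_infrastructure",  # manages essential utilities or transportation
    "credit_insurance",         # determines financial eligibility or pricing
    "biometrics",               # identifies or categorises people
)


def screen_system(answers: Dict[str, bool]) -> List[str]:
    """Return the checklist areas answered 'yes', in checklist order.

    Unanswered questions count as 'no' in this sketch; unknown keys raise
    KeyError so that a typo cannot silently hide a 'yes'.
    """
    unknown = set(answers) - set(CHECKLIST_AREAS)
    if unknown:
        raise KeyError(f"unknown checklist areas: {sorted(unknown)}")
    return [area for area in CHECKLIST_AREAS if answers.get(area, False)]


if __name__ == "__main__":
    flagged = screen_system({"employment": True, "biometrics": False})
    # Any flagged area means the system needs a full Article 6 review.
    print("Needs Article 6 review:" if flagged else "No checklist area flagged.", flagged)
```

Treating unanswered questions as “no” keeps the sketch short; a stricter variant would reject incomplete answer sets so that nothing is skipped by accident.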

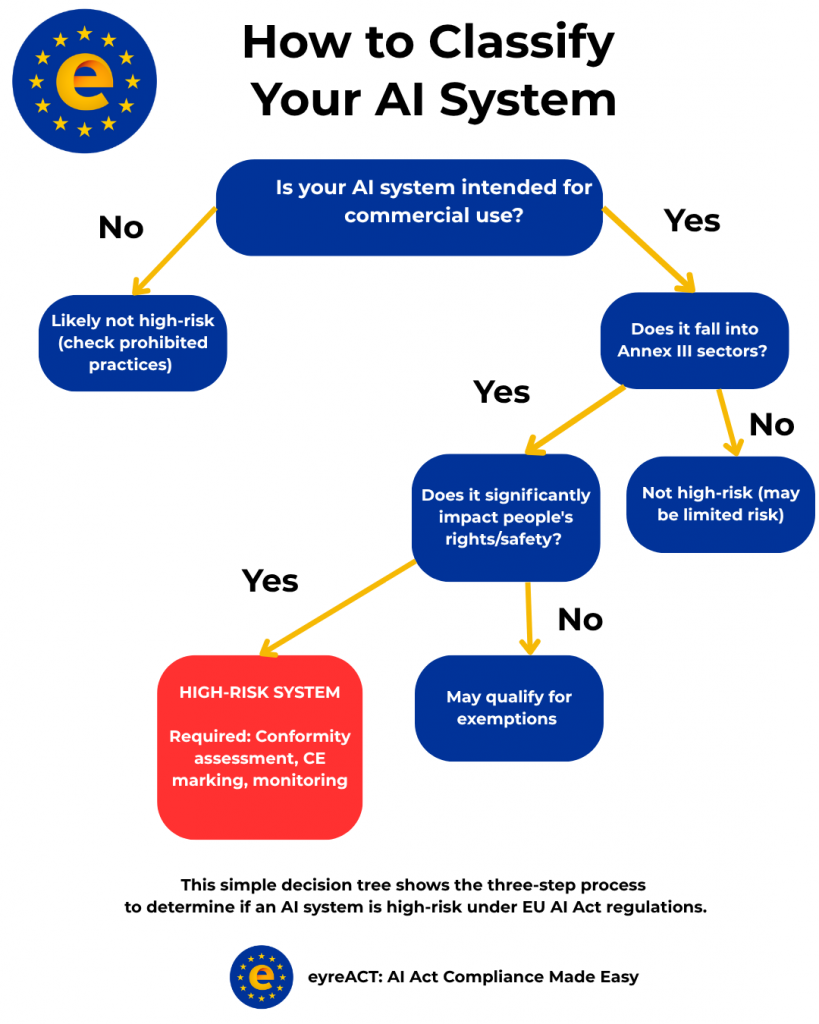

Decision Flowchart: How to Classify Your AI System
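The classification decision follows Article 6 in a fixed order: prohibited practices first, then the Annex I product route under Article 6(1), then the Annex III route under Article 6(2) together with the Article 6(3) exemption. A simplified Python sketch, assuming each legal test has already been answered as a yes/no flag (in practice each flag needs its own legal analysis); `SystemProfile`, `classify`, and all field names are hypothetical:

```python
from dataclasses import dataclass
from enum import Enum


class Classification(Enum):
    PROHIBITED = "prohibited (Article 5)"
    HIGH_RISK = "high-risk"
    NOT_HIGH_RISK = "not high-risk (transparency duties may still apply)"


@dataclass
class SystemProfile:
    """Hypothetical yes/no answers gathered during an assessment."""
    prohibited_practice: bool = False       # matches an Article 5 prohibited practice
    annex_i_safety_component: bool = False  # safety component of (or is) an Annex I product
    third_party_assessment: bool = False    # that product needs third-party conformity assessment
    annex_iii_use_case: bool = False        # intended purpose is listed in Annex III
    performs_profiling: bool = False        # profiles natural persons
    article_6_3_exemption: bool = False     # narrow procedural, preparatory, or similar task


def classify(p: SystemProfile) -> Classification:
    # Step 1: banned practices are ruled out before anything else.
    if p.prohibited_practice:
        return Classification.PROHIBITED
    # Step 2: Article 6(1), the product-safety route via Annex I.
    if p.annex_i_safety_component and p.third_party_assessment:
        return Classification.HIGH_RISK
    # Step 3: Article 6(2), the Annex III use-case route.
    if p.annex_iii_use_case:
        # Profiling of natural persons keeps the system high-risk regardless.
        if p.performs_profiling:
            return Classification.HIGH_RISK
        # Article 6(3): no significant risk because the task is narrow or preparatory.
        if p.article_6_3_exemption:
            return Classification.NOT_HIGH_RISK
        return Classification.HIGH_RISK
    return Classification.NOT_HIGH_RISK
```

Applied to the resume-screening tool in the case study below, `classify(SystemProfile(annex_iii_use_case=True, performs_profiling=True))` returns `Classification.HIGH_RISK`: ranking candidates is profiling, and profiling rules out the exemption. A provider that does rely on the Article 6(3) exemption must document that assessment before the system is placed on the market (Article 6(4)).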

Real-World Case Study: Resume-Screening Tool

Scenario: A software company develops an AI system that automatically screens job applications for a major retailer, ranking candidates and filtering out those below a certain threshold.

Analysis using our checklist:

Employment decisions? Yes – directly influences hiring (Annex III, point 4 covers AI that filters job applications and evaluates candidates)

Significant impact? Yes – affects people’s livelihood and career prospects, and because ranking candidates involves profiling, the Article 6(3) exemption for narrow or preparatory tasks does not apply

Commercial use? Yes – sold as a service to employers

Classification: HIGH-RISK SYSTEM

Required compliance:

- Conformity assessment before market entry

- CE marking and EU declaration of conformity

- Risk management system implementation

- Training data governance and bias testing

- Human oversight requirements

- Transparency obligations to job applicants
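These obligations have a natural order: the conformity assessment checks that requirements such as risk management, data governance, and human oversight are in place, and CE marking follows a successful assessment. A Python sketch of a pre-market tracker under that simplified ordering assumption; the item keys, `PREREQUISITES`, `next_actions`, and `ready_for_market` are hypothetical, and the list covers only this case study, not every obligation in the Act:

```python
from typing import Dict, List, Set

# Case-study obligations mapped to the items that must be finished first.
# The dependencies are a simplified assumption, not a legal sequence.
PREREQUISITES: Dict[str, List[str]] = {
    "risk_management": [],
    "data_governance_and_bias_testing": [],
    "human_oversight": [],
    "transparency_to_applicants": [],  # no prerequisite assumed
    "conformity_assessment": [
        "risk_management",
        "data_governance_and_bias_testing",
        "human_oversight",
    ],
    "ce_marking_and_declaration": ["conformity_assessment"],
}


def next_actions(done: Set[str]) -> List[str]:
    """Return open obligations whose prerequisites are all complete."""
    return [
        item
        for item, deps in PREREQUISITES.items()
        if item not in done and all(dep in done for dep in deps)
    ]


def ready_for_market(done: Set[str]) -> bool:
    """True only when every tracked obligation is complete."""
    return all(item in done for item in PREREQUISITES)


if __name__ == "__main__":
    done = {"risk_management", "data_governance_and_bias_testing", "human_oversight"}
    print("Next:", next_actions(done))
    print("Ready for market:", ready_for_market(done))
```

Keeping the dependencies in a plain dictionary makes the assumed ordering easy to review and adjust once legal counsel confirms the actual sequence.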

Key Takeaways for 2025

The EU AI Act’s high-risk classification isn’t just about technology—it’s about context and impact. The same AI model could be low-risk in one application and high-risk in another.

Remember: “Misclassification leads to the wrong obligations—or no obligations at all.” (Julie Gabriel, founder of eyreACT)

Getting this assessment right from the start saves significant compliance costs and regulatory headaches down the line.

Quick Reference: High-Risk vs. Other Categories

| Category | Regulatory Requirement | Example Measures |
|---|---|---|
| High-Risk | Strict requirements | Conformity assessment, CE marking, Ongoing monitoring |
| Limited Risk | Transparency obligations | Disclosure to users, Labelling of AI-generated content |
| Minimal Risk | No specific rules | Voluntary codes of conduct |

Turn AI Act compliance from a challenge into an advantage

eyreACT is building the definitive EU AI Act compliance platform, designed by regulatory experts who understand the nuances of Articles 3, 6, and beyond. From automated AI system classification to ongoing risk monitoring, we’re creating the tools you need to confidently deploy AI within the regulatory framework.

Need help classifying your AI system? The stakes are high, so make sure you get it right with a proper legal and technical assessment before market entry. Try the eyreACT self-assessment tool to verify your classification.